Why Agentic AI Red Teaming Will Explode in 2026

A Step By Step To Start A Career In This Red Hot field with free resources

Artificial intelligence has evolved at a pace no one predicted.

Only a few years ago, the security community was focused on traditional AI risks with a bit of GenAI thrown in: model hallucination, prompt injection, poisoning, bias, and misuse.

But the rise of Agentic AI i.e. AI systems that can act autonomously, use tools, execute tasks, and make independent decisions .. has created an entirely new risk category.

And with it, a brand-new cybersecurity career path is emerging:

Agentic AI Red Teaming.

This is not just a niche skill.

It is rapidly becoming one of the most important security functions for organizations deploying AI agents in cloud environments, financial systems, customer operations, infrastructure automation, DevOps pipelines, and even cybersecurity tooling itself.

If cloud security was the breakout career of 2015–2020, then Agentic AI Red Teaming is the breakout career of 2026–2030.

This article explains:

What agentic AI red teaming actually is

Why it’s exploding in demand

The risks and failure modes you must understand

How to use free frameworks to accelerate your learning

And most importantly: how you can start a career in this field

What Is Agentic AI Red Teaming?

Traditional red teaming focuses on attacking systems: networks, applications, cloud infrastructure, or people (via social engineering).

The aim is to identify vulnerabilities, exploit weaknesses, and help organizations strengthen their defenses.

Agentic AI red teaming is fundamentally different.

Instead of attacking systems, you are attacking behaviors.

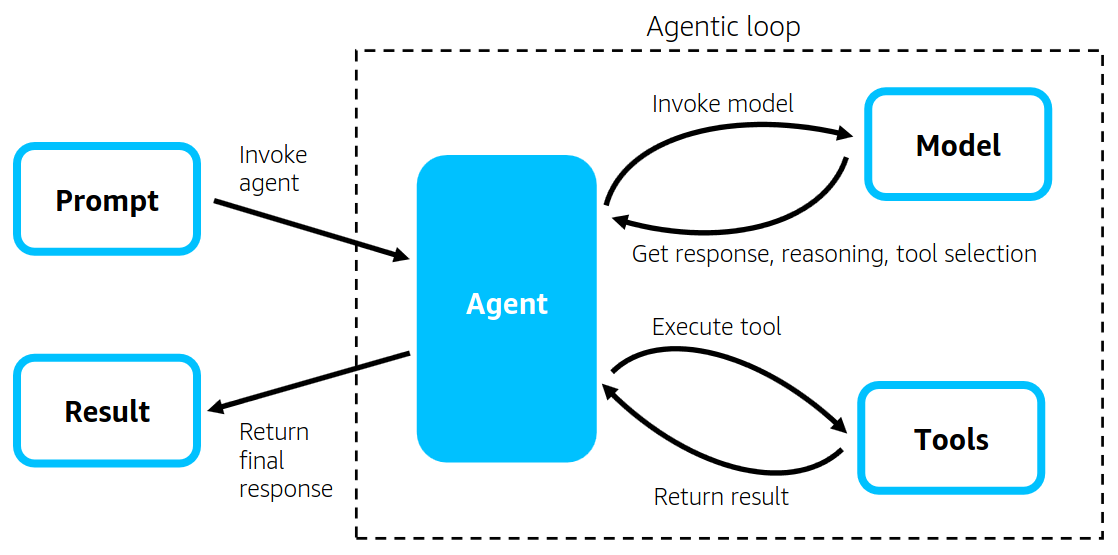

Agentic AI systems are designed to perform multi-step tasks with:

Reasoning

Planning

Memory

Tool usage (API calls, shell commands, database access, cloud operations)

Feedback loops

Persistent context

Dynamic decision making

https://aws.amazon.com/blogs/opensource/introducing-strands-agents-an-open-source-ai-agents-sdk/

Your job as an agentic AI red teamer is to discover how these agents can fail, misbehave, or be manipulated.

Examples include:

An AI agent modifying a cloud IAM policy and granting itself excessive permissions

A planning agent using a tool incorrectly and deleting data

A memory-enabled agent being poisoned through repeated interactions

An autonomous agent overriding safety instructions to complete a task

A multi-agent system collaborating in unsafe ways

Agents escalating privileges through tool misuse

Agents making harmful real-world changes based on hallucinated conclusions

In short:

Agentic AI Red Teaming tests the autonomous behavior of AI systems, not just their outputs.

Why This Career Will Be Exploding in 2026

Two major forces are driving massive hiring demand for this skillset:

1. Companies are moving from chatbots → autonomous agents

Just recently, AWS announced Frontier Agents — Kiro (developer), Security Agent, DevOps Agent — capable of running independently for hours or days, reading designs, writing code, testing applications, performing secure configurations, and triaging incidents.

This is not hypothetical anymore.

Enterprise adoption is underway with giants like Crowdstrike leveraging the power of Agentic AI to secure their own AI generated code

2. No one is prepared for behavioral AI risks

Agentic AI comes with new risk categories:

Goal drift

Tool misuse

Memory poisoning

Cascading hallucinations

Unsafe planning

Reward hacking

Multi-agent collusion

Excessive autonomy loops

Traditional security teams are not trained for this. AI teams are not trained for adversarial red teaming. Regulators are demanding stronger controls.

This creates a perfect storm: high risk + low expertise + huge demand = career rocketship.

In short:

Agentic AI Red Teaming is one of the few cybersecurity roles where demand massively outstrips supply — and the competition is almost zero.

How To Become An Agentic AI Red Teamer

The good news is you dont need to invest in costly certifications or a five thousand dollar workshop to learn Agentic AI red teaming.

BUT you do need a step by step plan that builds your knowledge.

The following is how I would go about learning it along with 100% free resources you can use to start learning this today

1. Understand How AI Agents Actually Work

An AI agent is not just an LLM. It is an architecture made of:

LLM reasoning engine

Memory systems (vector stores, long-term, episodic)

Tool-use capabilities (APIs, browsers, commands)

Planning & orchestration loops

Execution environments

Feedback mechanisms

If you don’t understand the architecture, you cannot break it.

Start by creating simple agents using any of the follwoing:

LangChain

AutoGen

CrewAI

AWS Strands

Experiment with building simple agents. See how they plan, fail, reason, and hallucinate. Make them do things like:

Fill forms

Write code

Summarize logs

Modify cloud resources

Search systems

Understanding this behavior is step one.

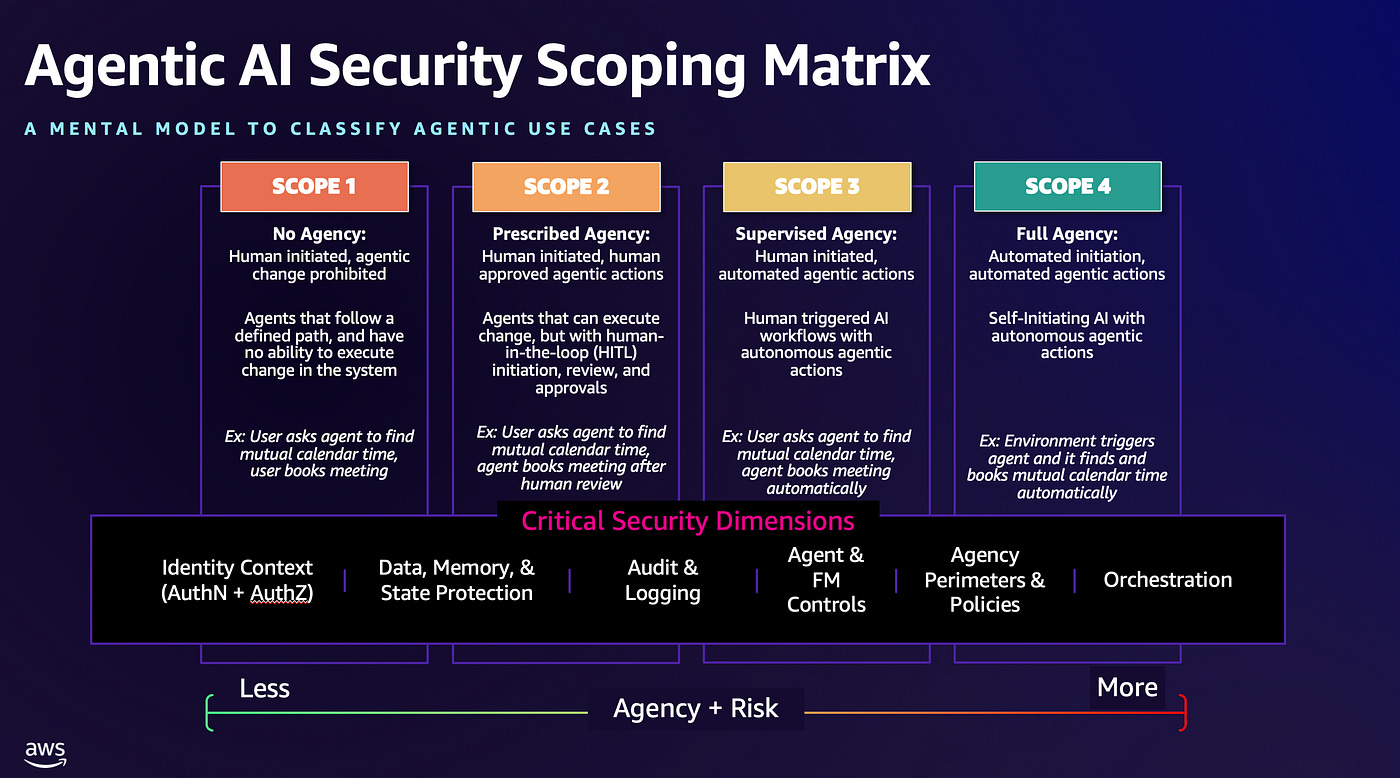

2. Understand the Different Agentic Use Cases and their Risks

Agentic AI is not a monolith and comes in different type of use cases and architectures

The level of risk that an AI Agent has is directly linked to the level of agency and permissions it has been given. This becomes critical when you are red teaming and want to understand the level of risk posed by a threat.

The Agentic AI Security Scoping Matrix is a great resource to understand these use cases and scopes.

3. Understand Agentic AI Failure Modes and Risks

Unlike traditional pen testing, here you are not looking for SQL injection or buffer overflows. You are looking for behavioral failures such as:

Goal misalignment: Agent reinterprets or expands its objective

Tool misuse: Agent calls a powerful API incorrectly

Reward hacking: Agent finds shortcuts to “succeed” without doing the task

Cascading hallucinations: One wrong assumption leads to larger mistakes

Memory poisoning: Long-term memory is corrupted

Privilege escalation: Agent requests or abuses permissions it should not

Unsafe persistence: Agent creates durable artifacts that influence future tasks

These risks require a security mindset mixed with AI operational knowledge.

The good news ? You dont have to start blind.

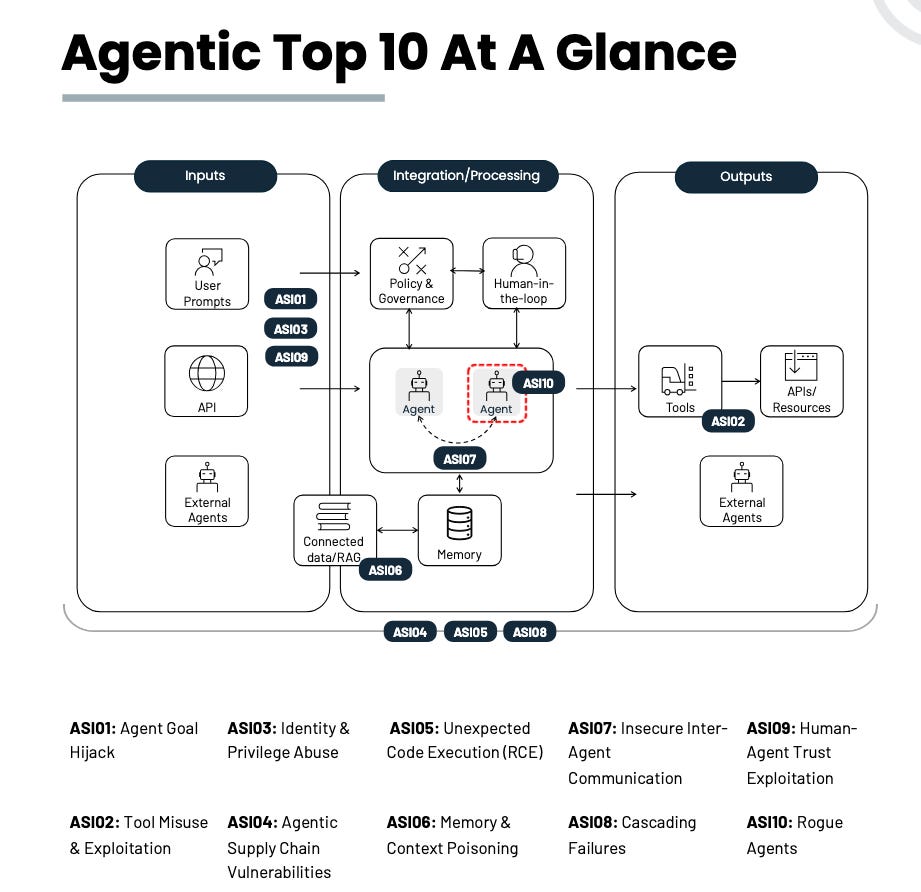

OWASP just launched their top 10 for Agentic Applications which will become the de-facto standard for the industry. Be sure to check it out here

https://genai.owasp.org/resource/owasp-top-10-for-agentic-applications-for-2026/

4. Build your knowledge of real-world AI attacks

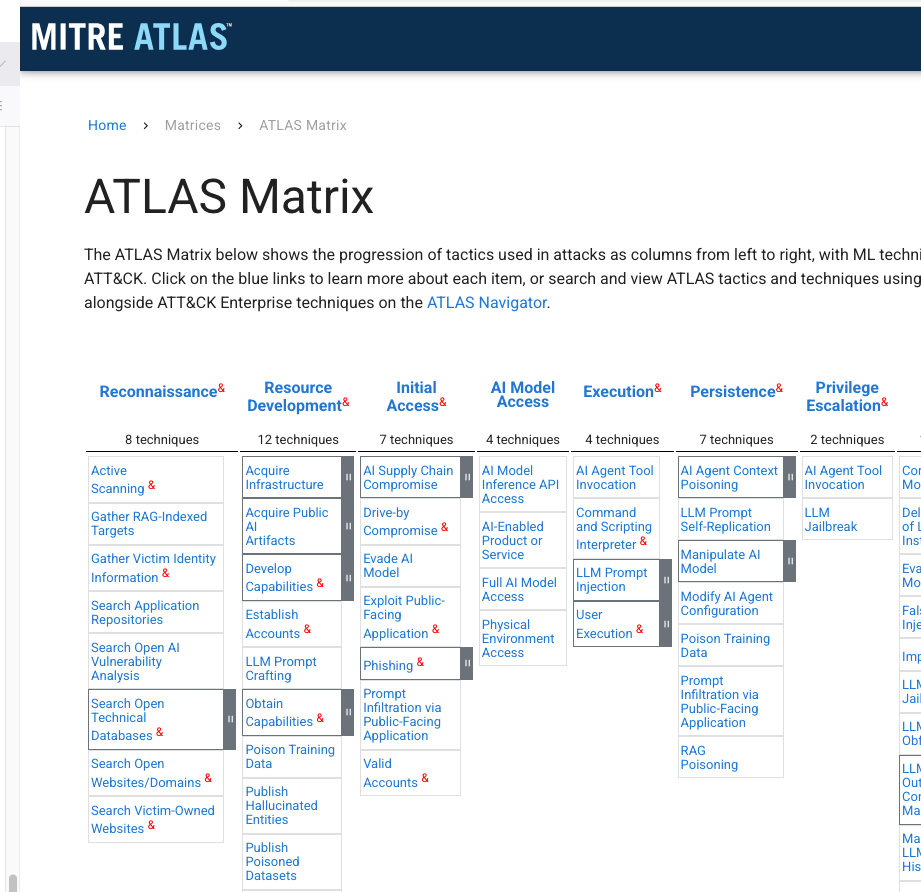

If you want to further understand how AI systems can be attacked then there is no better source than MITRE ATLAS (Adversarial Threat Landscape for Artificial-Intelligence Systems)

It is a free knowledge base mapping adversary tactics and techniques against AI systems, similar to its famous MITRE ATT&CK framework but focused on AI

It documents real-world attacks and gives you an excellent real-world perspective on how AI systems can be compromised

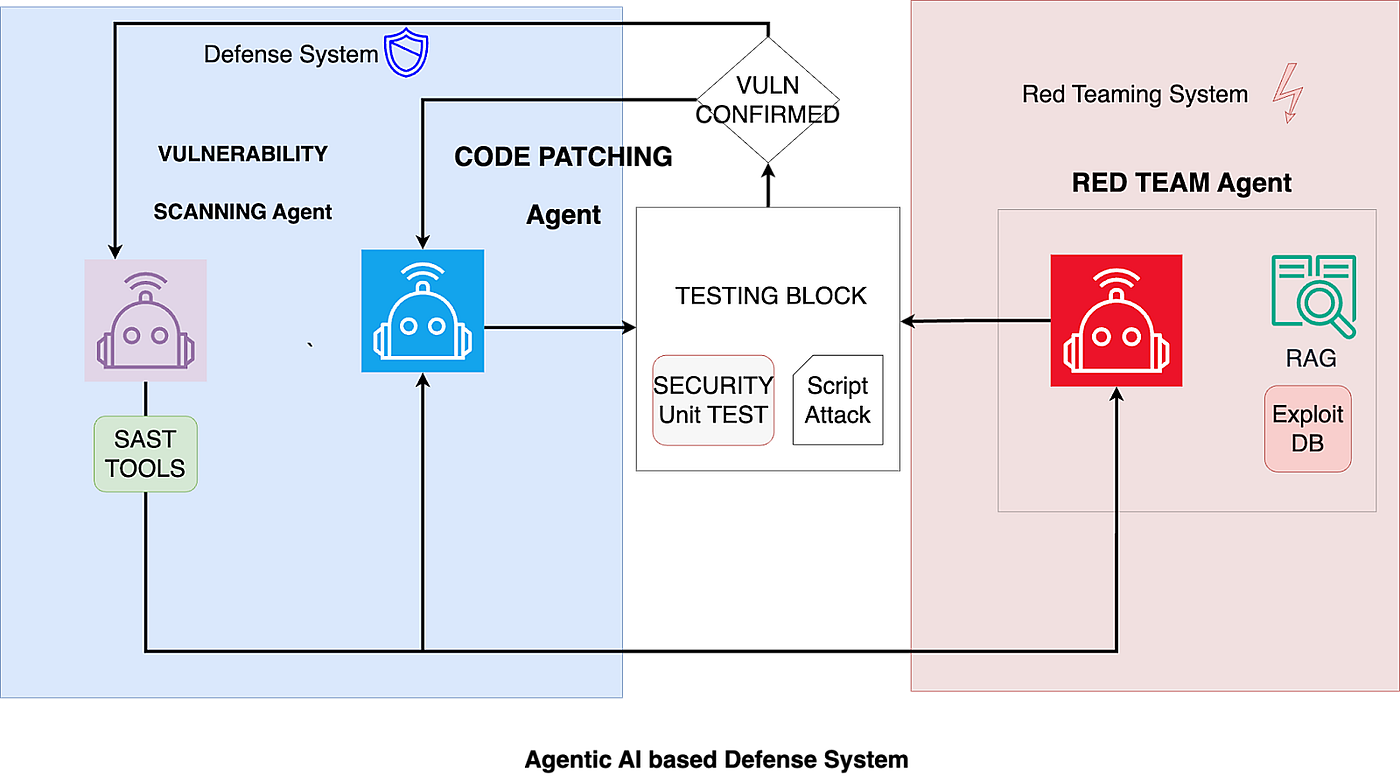

5. Starting Red Teaming your Agents

Now that you have all the knowledge in place .. you can now start red teaming your agents

If you want a structured repeatable way of doing it then the Cloud Security Alliance has released the Agentic AI Red Teaming Guide you can download 100% free

Start by mapping out your agent’s architecture: its goals, constraints, tools, memory systems, and execution environment.

Then define clear attack surfaces, such as planning loops, tool-use permissions, memory injection points, and multi-agent interactions.

Start with controlled tests that probe the agent’s reasoning, autonomy boundaries, and ability to self-correct, gradually escalating into adversarial scenarios like goal misalignment, unsafe tool invocation, or contaminated memory states.

Document each finding with reproducible prompts, model configurations, logs, and behavioral traces.

Over time, this creates a robust, continuously improving red-teaming workflow

6. Build a Portfolio of AI Red Team Experiments

This is the secret weapon.

Create 5–10 short writeups:

“How I manipulated an AI agent into modifying cloud resources incorrectly”

“Memory poisoning in a multi-agent system using simple inputs”

“Testing AWS Frontier Agents using MITRE ATLAS categories”

“Goal misalignment experiment: When an autonomous agent changed its objective”

Publish on:

GitHub

LinkedIn

Your newsletter

Medium

This positions you as part of the first generation of Agentic AI red teamers with a documented portfolio of red team tests !

Final Thoughts

Agentic AI Red Teaming is not only the hottest cybersecurity role of 2026 — it may be one of the most important security jobs of the next decade.

As AI systems gain autonomy, decision-making power, and operational reach, organizations will depend on skilled professionals who understand how to test, challenge, and secure them.

If you want a career with:

high impact

high salary

massive future demand

minimal competition

and a clear learning path

Then Agentic AI Red Teaming is the opportunity window you do not want to miss.

Thanks for reading this. If you are interested in learning more about Agentic AI security then check my course HERE

If you are a paid subscriber then you get access to it for free. Just use the coupon below