How Agentic AI Will Reshape Cybersecurity Teams by 2028

Will Agentic AI be a co-worker or a tool for cybersecurity teams?

If you are sick of hearing the term “Agentic AI”, I have bad news for you.

Agentic AI is not going away anytime soon.

Agentic systems are already taking actions, making decisions, and coordinating multiple steps across tools and environments, doing work that was once a full-time human responsibility.

What does this mean for cybersecurity professionals?

This shift won’t eliminate cybersecurity jobs altogether — but it will change their shape, focus, and value.

Roles like SOC analyst, DevSecOps engineer, and security architect are being redefined.

Those who adapt will thrive. Those who don’t may find themselves sidelined.

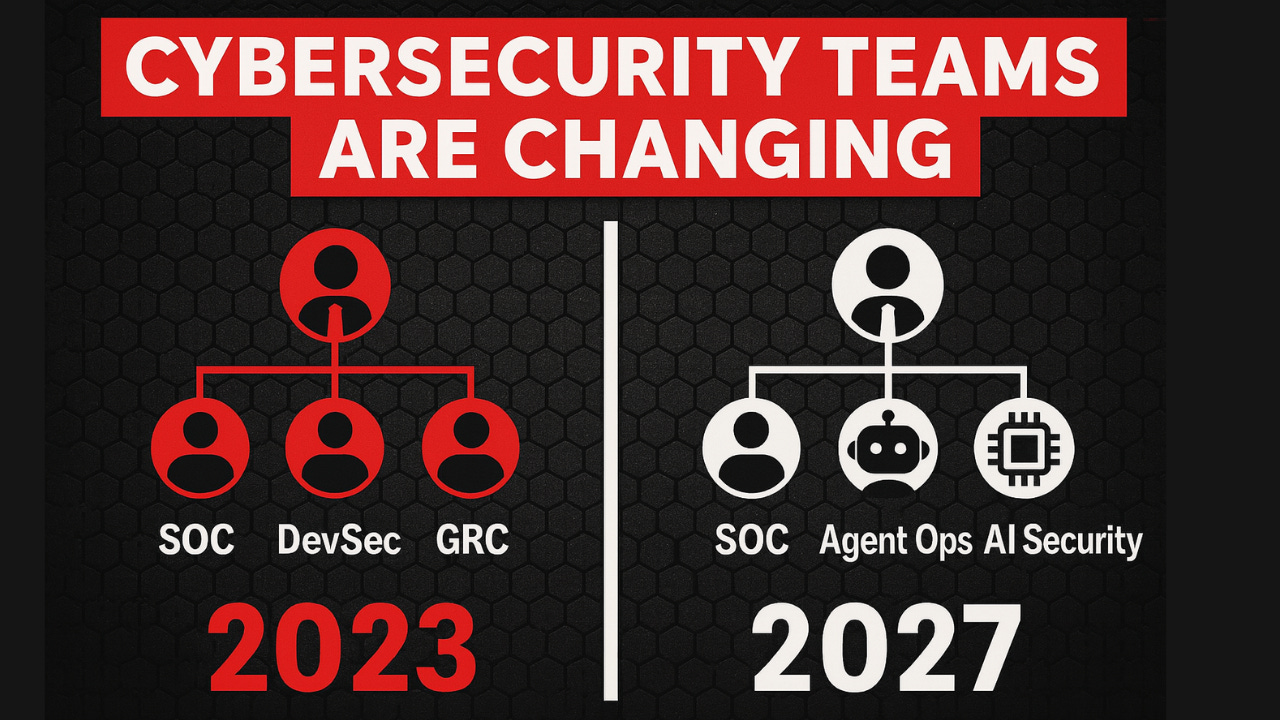

Here’s how Agentic AI will reshape cybersecurity teams over the next three years, and how you can prepare.

How Cybersecurity Roles Are Evolving

Let’s break down a few common cybersecurity roles and how Agentic AI is likely to transform them by 2028.

1 — The SOC Analyst: From Alert Triager to AI Supervisor

What’s Changing:

The classic Level 1 SOC analyst role — monitoring dashboards, escalating alerts, writing detection queries — is rapidly being automated.

Agentic AI can ingest logs, correlate alerts, and even generate risk-scored incident summaries faster than a junior analyst ever could.

Example:

By 2028, a SOC team at a financial institution uses an agent stack that:

Ingests CloudTrail, EDR, and DNS logs in real-time

Detects anomalies using self-improving detection agents

Creates a JIRA ticket with root cause and remediation recommendations

Executes basic containment via integration with XDR platforms

A junior SOC analyst is no longer needed to perform these steps.

Instead, the human role becomes supervisory: reviewing agent output, tuning agent guardrails, and focusing on nuanced investigations.
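To make the division of labor concrete, here is a minimal Python sketch of the flow described above: alerts are correlated into incidents, a ticket is always drafted, and containment only runs once a human signs off. Every name in it (`Alert`, `Incident`, `correlate`, `triage`, `CONTAINMENT_THRESHOLD`) is hypothetical and chosen for illustration; it is not the API of any real SOC or XDR product, and the 0.8 threshold is an assumption.

```python
from dataclasses import dataclass, field

# Hypothetical names and values for illustration only.
CONTAINMENT_THRESHOLD = 0.8  # assumed risk score at which containment is proposed


@dataclass
class Alert:
    source: str        # e.g. "cloudtrail", "edr", "dns"
    host: str
    risk_score: float  # 0.0 to 1.0, assumed to come from a detection agent


@dataclass
class Incident:
    host: str
    alerts: list = field(default_factory=list)

    @property
    def risk_score(self) -> float:
        # An incident is as risky as its most severe alert.
        return max(a.risk_score for a in self.alerts)


def correlate(alerts):
    """Group alerts by host so related signals land in one incident."""
    incidents = {}
    for alert in alerts:
        incidents.setdefault(alert.host, Incident(host=alert.host)).alerts.append(alert)
    return list(incidents.values())


def triage(incident, approved_by=None):
    """Return the actions the agent may take for one incident.

    A ticket is always drafted; containment runs only after a human approves.
    """
    actions = ["draft_ticket"]
    if incident.risk_score >= CONTAINMENT_THRESHOLD:
        actions.append("contain_host" if approved_by else "await_human_approval")
    return actions
```

The design choice worth noticing is that the approval check lives inside `triage`, so the agent cannot reach containment without a named human in the loop.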

How to Prepare:

Learn prompt engineering for security analysis

Get fluent in interpreting outputs from LLMs and agentic platforms

Build skills in agent validation, bias detection, and false positive management

Learn how to create your own agents using frameworks like CrewAI or Strands Agents (from AWS)
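Agent validation and false positive management can start very simply: compare the agent's verdicts with what analysts concluded on the same alerts. The sketch below is one way to do that in Python. The function name `verdict_metrics` and the boolean input format are assumptions made for this example, not part of any framework mentioned above.

```python
def verdict_metrics(agent_verdicts, analyst_labels):
    """Compare agent verdicts with analyst ground truth.

    Both arguments are equal-length lists of booleans (True = malicious).
    """
    if len(agent_verdicts) != len(analyst_labels):
        raise ValueError("verdicts and labels must have the same length")

    pairs = list(zip(agent_verdicts, analyst_labels))
    tp = sum(1 for a, h in pairs if a and h)          # agent and analyst agree: malicious
    fp = sum(1 for a, h in pairs if a and not h)      # agent flagged, analyst cleared
    fn = sum(1 for a, h in pairs if not a and h)      # agent missed a real incident
    tn = sum(1 for a, h in pairs if not a and not h)  # both agree: benign

    return {
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
    }
```

Tracking these numbers per agent version gives a supervisor something objective to tune guardrails against, rather than a general impression of how noisy the agent feels.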

2 — DevSecOps Engineer: From Pipeline Integrator to AI-Orchestrated Architect

What’s Changing:

DevSecOps used to be about writing security policies in code, embedding scanners in CI/CD, and automating guardrails.

Agentic AI now does much of this on its own — reading cloud architecture diagrams, identifying risks, and writing IaC policies automatically.

The DevSecOps engineer’s role evolves from doing these integrations manually to designing agent-driven pipelines, validating changes, and managing escalation workflows.

How to Prepare:

Learn how multi-agent frameworks work and how to make agents talk to each other

Focus on governance, explainability, and agent accountability in DevOps environments

Shift-left will remain, but it will now extend to artifacts such as prompt templates and agent specifications
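If prompt templates become shift-left artifacts, they can be linted in CI like any other file. Here is a rough Python sketch of such a check. The required placeholders (`resource`, `policy`) and the function name `lint_prompt_template` are assumptions for illustration; the secret pattern only matches the well-known shape of an AWS access key ID and is not a complete secret scanner.

```python
import re
from string import Formatter

# Assumed placeholders every template must declare (hypothetical convention).
REQUIRED_FIELDS = {"resource", "policy"}
# Shape of an AWS access key ID: "AKIA" followed by 16 uppercase letters or digits.
SECRET_PATTERN = re.compile(r"AKIA[0-9A-Z]{16}")


def lint_prompt_template(template: str) -> list:
    """Return a list of findings; an empty list means the template passes."""
    findings = []

    # Formatter().parse yields (literal, field_name, format_spec, conversion) tuples.
    fields = {name for _, name, _, _ in Formatter().parse(template) if name}
    missing = REQUIRED_FIELDS - fields
    if missing:
        findings.append(f"missing placeholders: {sorted(missing)}")

    if SECRET_PATTERN.search(template):
        findings.append("template appears to contain a hard-coded access key")

    return findings
```

A pipeline step could fail the build whenever the returned list is non-empty, which puts prompt changes through the same gate as code changes.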

3 — The Security Architect: From Design Gatekeeper to Agent Governance Leader

What’s Changing:

Security architects traditionally focused on system-level design, threat modeling, and architecture reviews.

Now, Agentic AI can propose architectures, simulate attack paths, and even implement mitigations.

But here’s the catch: agent-driven systems introduce new attack surfaces — LLM hallucinations, agent takeover, misaligned goals.

Architects are no longer just protecting systems. They must secure autonomous AI behavior.

The security architect is now responsible for:

Ensuring the agent’s access permissions are scoped and auditable

Designing oversight mechanisms to prevent rogue actions

Implementing guardrails like rate limits, approval steps, and behavioral boundaries
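The three responsibilities above (scoped permissions, approval steps, rate limits) can be combined in a single check that runs before every agent action. The following Python sketch shows one possible shape. `AgentGuardrail` and its parameters are hypothetical names for this example, and the sliding-window rate limit is just one of several reasonable strategies.

```python
import time
from collections import deque


class AgentGuardrail:
    """Hypothetical wrapper that decides whether an agent action may run."""

    def __init__(self, allowed_actions, needs_approval, max_actions, per_seconds):
        self.allowed_actions = set(allowed_actions)  # scoped permissions
        self.needs_approval = set(needs_approval)    # actions gated on a human
        self.max_actions = max_actions               # rate limit: count ...
        self.per_seconds = per_seconds               # ... per sliding window
        self._recent = deque()  # timestamps of recently permitted actions
        self.audit_log = []     # every decision is recorded for later review

    def check(self, action, approved=False, now=None):
        now = time.monotonic() if now is None else now
        # Drop timestamps that have fallen out of the rate-limit window.
        while self._recent and now - self._recent[0] >= self.per_seconds:
            self._recent.popleft()

        if action not in self.allowed_actions:
            decision = "deny: out of scope"
        elif action in self.needs_approval and not approved:
            decision = "deny: approval required"
        elif len(self._recent) >= self.max_actions:
            decision = "deny: rate limit"
        else:
            self._recent.append(now)
            decision = "allow"

        self.audit_log.append((now, action, decision))
        return decision
```

Denied requests are logged as well as allowed ones, since a run of out-of-scope attempts is often the first visible sign of agent takeover or a misaligned goal.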

How to Prepare:

Study AI alignment and safety frameworks (e.g., NIST AI RMF, OWASP Top 10 for LLM Applications)

Gain expertise in RBAC for agents, agent sandboxing, and behavioral monitoring

Learn policy-as-code tools for AI behavior enforcement

Lead design reviews that include AI agent threat modeling and misuse scenarios

4 — Governance, Risk & Compliance (GRC) Analyst: From Policy Admin to AI Oversight Architect

What’s Changing:

AI is transforming how compliance is managed.

Agents can scan environments, flag non-compliant resources, and even suggest remediations based on ISO/NIST frameworks.

But GRC pros are now expected to go beyond checklists — they must understand how to govern AI agents themselves.

How to Prepare:

Get familiar with AI-specific compliance frameworks (e.g., NIST AI RMF, ISO/IEC 42001)

Learn how to review audit logs for signs of misaligned agent behavior and biased decisions

Understand the intersection of AI ethics, compliance, and security policy
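Reviewing agent audit logs does not have to begin with a dedicated platform. As a small Python sketch, suppose each agent action is logged as one JSON object per line with `agent`, `action`, `high_impact`, and `approved_by` fields. That log format is an assumption made for this example, as is the function name `flag_audit_entries`.

```python
import json


def flag_audit_entries(lines, granted_scopes):
    """Return (entry, reason) pairs for audit records that deserve human review.

    lines: iterable of JSON strings, one agent action per line (assumed format).
    granted_scopes: dict mapping agent name to the set of actions it may take.
    """
    findings = []
    for line in lines:
        entry = json.loads(line)
        allowed = granted_scopes.get(entry["agent"], set())
        if entry["action"] not in allowed:
            findings.append((entry, "action outside granted scope"))
        elif entry.get("high_impact") and not entry.get("approved_by"):
            findings.append((entry, "high-impact action without approval"))
    return findings
```

Bias review would need richer data than this, but scope and approval checks like these are a reasonable first pass that a GRC analyst can own end to end.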

How to Future-Proof Yourself Today

Learn AI-native security tools — Get hands-on with CrewAI, OpenAI Assistants and Bedrock agents.

Practice securing agent workflows — Set up simulated scenarios where you govern agents performing security tasks (e.g., rotating secrets, scanning containers, fixing misconfigurations).

Build and publish projects — Create GitHub repos showing how to integrate Agentic AI into security workflows. This boosts your visibility and showcases your adaptation.

Stay updated with standards — Follow NIST, ENISA, and OWASP guidance on AI security and autonomous systems.

Shift your mindset — Don’t compete with AI. Partner with it. Focus on roles that require judgment, empathy, creativity, and accountability

The 2028 Cybersecurity Org Chart: Humans + Agents

The structure of cybersecurity teams is shifting to accommodate human-agent collaboration.

Here’s a simplified view of how a mid-sized security team might look in 2028:

    CISO
    ├── Human Security Leads
    │   ├── SOC Lead
    │   ├── DevSec Lead
    │   ├── GRC Lead
    │   └── AI Security Architect
    └── Agent Operations Lead
        ├── Alert Agents (L1)
        ├── Threat Intel Agents
        └── Agent Guardrail Designers

Some of the key changes you can expect are:

Agent Operations Lead: Oversees all AI agents involved in cybersecurity tasks.

Human Leads still exist — but manage hybrid teams (humans + AI agents).

AI Security Architect: Designs systems to secure the behavior of agents.

Agent Guardrail Designers: Build safeguards around autonomous actions.

AI-First Roles (Alert Agents, Intel Agents): Do repetitive security work at scale.

More cross-functional integration — e.g., GRC working with PromptOps, DevSec collaborating with Prompt Engineers.

Final Thought

By 2028, cybersecurity won’t be “AI versus human” — it will be “AI-augmented human teams” led by professionals who understand both the technical and operational dynamics of Agentic AI.

The org chart will shift. Job descriptions will evolve. But one thing remains: security will always need human judgment.

The difference is that tomorrow’s security teams will be defined by those who know how to design, govern, and collaborate with AI — not compete with it.
