Agentic AI Is Not Just Hype — How Cybersecurity Teams Can Get Ready

Agentic AI Without the Hype: A Guide for Cybersecurity Professionals

Let’s be honest: you’ve seen enough “AI will replace everyone” headlines to last a lifetime.

This article is not that.

Since ChatGPT burst onto the scene in late 2022, companies have gone through several distinct phases.

Phase 1 was the “chat wrapper” era.

Companies rushed to build quick GenAI proofs of concept (PoCs), putting together nice demos on top of clunky LLM calls.

Some worked, but most failed.

Phase 2 brought Agentic AI: systems that plan, call tools, keep memory, and take multi-step actions.

Exciting… until you try to move to production. Then you hit the same walls everyone else does:

Who is the agent allowed to be, what can it do, how do we observe it, and how do we prove it behaved?

Many organizations stall in proof-of-concept/pilot purgatory because those answers aren’t designed up front.

In fact, a recent MIT study suggested that most enterprise AI projects are doomed to fail.

I tend to disagree. I believe we are moving past the hype and into reality with Phase 3.

This is the phase where the game truly changes, thanks to enterprise-level Agentic Platforms and the Model Context Protocol (MCP).

Let's take a look at how.

What finally changes the game

Two shifts are helping companies escape AI proof-of-concept hell:

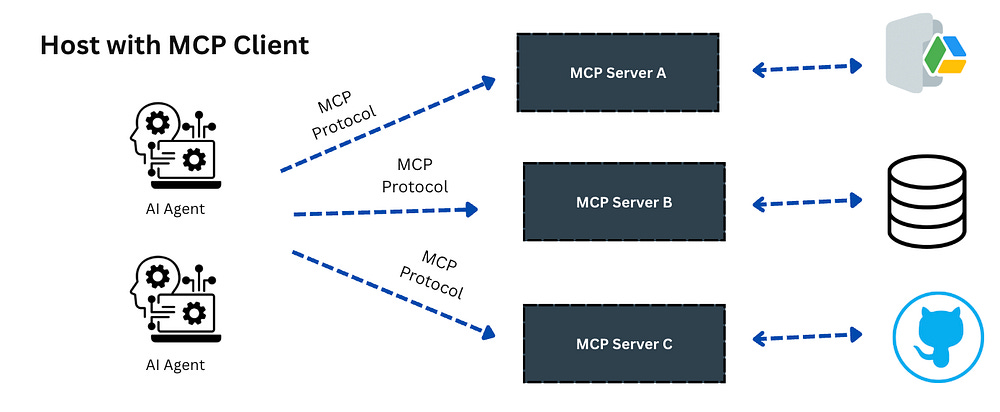

1 — Model Context Protocol (MCP)

MCP is like USB-C for AI agents: a standard way to plug agents into tools and data sources.

Instead of hand-wiring clunky, one-off integrations for each agent, any MCP-compatible agent can reuse the same tool servers, greatly increasing its scope and reach (and also the risks!).

Imagine an AI agent that reads your security standards in Confluence via MCP and then designs your cloud architecture based on them.

2 — Enterprise Level Agentic Platforms

As I mentioned, AI agents were great in PoCs but lacked the maturity needed for production-level work.

They were missing things like identity controls, session isolation, and logging, all of which security teams expect before giving that green tick for go-live.

Now we have platforms emerging that bake in what these prototypes lacked.

The recent launch of Amazon Bedrock AgentCore is one example.

Organizations can now launch Agentic AI into live production environments with best practices for security, identity, and observability built in.

This is why cybersecurity teams need to stop kidding themselves.

Agentic AI is not going away anytime soon; only the over-hyped scenarios are.

If you want to future-proof your career, you and your cybersecurity teams need to start learning Agentic AI today.

A Field Guide For Cybersecurity Teams

Here is a quick guide to upskilling your teams on securing Agentic AI systems.

Step 1 — Pick one safe use case

The best way to learn Agentic AI is to build an agent yourself.

Don’t start with “automate my whole SOC.”

Pick one small, low-blast-radius job where the agent can’t do much harm if it fails.

Examples: summarize weekly vuln reports, draft Jira tickets from scan results, and prepare changelog notes.

Write down guardrails: inputs, outputs, data sensitivity, what the agent must never touch, and who approves results.
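Writing the guardrails down as data, rather than only in a wiki page, makes them checkable. Here is a minimal Python sketch, assuming a simple 0 to 2 sensitivity scale; the class, field names, and example values are all hypothetical and not taken from any particular framework.

```python
from dataclasses import dataclass
from typing import FrozenSet

# Assumed sensitivity scale for this sketch: 0 = public, 1 = internal, 2 = confidential.


@dataclass(frozen=True)
class GuardrailSpec:
    """Hypothetical written guardrails for one low-blast-radius agent job."""
    use_case: str
    allowed_inputs: FrozenSet[str]   # data sources the agent may read
    allowed_outputs: FrozenSet[str]  # artifacts the agent may produce
    max_sensitivity: int             # highest data sensitivity the agent may see
    never_touch: FrozenSet[str]      # systems that are off-limits, full stop
    approver: str                    # the human who signs off on results

    def may_read(self, source: str, sensitivity: int) -> bool:
        """Allow a read only if the source is not forbidden, is allow-listed,
        and is within the agreed sensitivity ceiling."""
        if source in self.never_touch:
            return False
        return source in self.allowed_inputs and sensitivity <= self.max_sensitivity

    def may_produce(self, artifact: str) -> bool:
        """Anything the agent emits must be one of the agreed output types."""
        return artifact in self.allowed_outputs


# Example spec for the "summarize weekly vuln reports" use case (all names hypothetical).
WEEKLY_VULN_SUMMARY = GuardrailSpec(
    use_case="Summarize weekly vulnerability reports",
    allowed_inputs=frozenset({"scanner_exports"}),
    allowed_outputs=frozenset({"summary_markdown"}),
    max_sensitivity=1,
    never_touch=frozenset({"prod_iam", "customer_db"}),
    approver="security-lead",
)
```

The deny-list check runs first on purpose, so a forbidden system stays forbidden even if someone later adds it to the allow-list by mistake.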

Teams that start this way learn faster and avoid getting stuck in PoC hell.

Step 2 — Scope the permissions

Before you touch prompts and models, sort out identity and permissions.

Start with read-only access; add permissions as needed.

If the agent ever acts on behalf of a user, require that user's consent and step-up authentication for sensitive actions.
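Here is one way to picture deny-by-default permissions in code. This is a hypothetical sketch: the scope names and the `authorize` helper are illustrative, and in a real deployment these checks would sit in your identity provider or agent platform rather than in application code.

```python
from dataclasses import dataclass, field
from typing import Optional, Set

# Scope names are hypothetical; use whatever your IdP or platform defines.
READ_ONLY_SCOPES = frozenset({"scans:read", "tickets:read"})
SENSITIVE_SCOPES = frozenset({"tickets:write", "firewall:update"})


@dataclass
class AgentIdentity:
    name: str
    # Every agent starts read-only; copy the defaults so agents don't share one set.
    scopes: Set[str] = field(default_factory=lambda: set(READ_ONLY_SCOPES))

    def grant(self, scope: str) -> None:
        """Add a permission only once a concrete need shows up."""
        self.scopes.add(scope)


def authorize(agent: AgentIdentity, scope: str, *, on_behalf_of: Optional[str] = None,
              user_consented: bool = False, step_up_verified: bool = False) -> bool:
    """Deny by default; layer consent and step-up auth on delegated actions."""
    if scope not in agent.scopes:
        return False  # least privilege: nothing that wasn't explicitly granted
    if on_behalf_of is not None:
        if not user_consented:
            return False  # acting for a user requires that user's consent
        if scope in SENSITIVE_SCOPES and not step_up_verified:
            return False  # sensitive delegated actions need fresh, stronger auth
    return True
```

Granting `tickets:write` to a ticket-drafting agent still leaves it unable to file tickets for a user until that user has consented and completed step-up authentication.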

Step 3 — Extend functionality with Tools

Expose internal APIs to the agent through a Model Context Protocol (MCP) server.

Most agents do not work in isolation, and this step teaches your team how to extend what an agent can do.
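To make this less abstract, here is a toy, standard-library-only Python sketch of the JSON-RPC 2.0 messages MCP uses to let an agent discover tools (`tools/list`) and call them (`tools/call`). The `get_open_findings` tool and its fake backend are hypothetical, and the sketch skips the initialization handshake, transports, and auth. Treat it as an illustration of the message shapes rather than a server you should deploy; the official MCP SDKs handle the rest. The error codes are the standard JSON-RPC ones.

```python
import json
from typing import Any, Callable, Dict

# Toy sketch of MCP-style tool discovery and invocation over JSON-RPC 2.0.
# No handshake, transport, or auth: illustration only.

TOOLS: Dict[str, Dict[str, Any]] = {}


def tool(name: str, description: str, input_schema: Dict[str, Any]):
    """Decorator that registers a function as a discoverable tool."""
    def register(fn: Callable[..., str]) -> Callable[..., str]:
        TOOLS[name] = {"fn": fn, "description": description, "inputSchema": input_schema}
        return fn
    return register


@tool(
    name="get_open_findings",  # hypothetical wrapper around an internal API
    description="Read-only: count open findings for a severity level.",
    input_schema={"type": "object",
                  "properties": {"severity": {"type": "string"}},
                  "required": ["severity"]},
)
def get_open_findings(severity: str) -> str:
    fake_backend = {"critical": 2, "high": 7}  # stand-in for your real internal API
    return f"{fake_backend.get(severity, 0)} open {severity} findings"


def _reply(req_id: Any, **body: Any) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": req_id, **body})


def handle(raw: str) -> str:
    """Dispatch one JSON-RPC request and return the JSON-RPC response."""
    req = json.loads(raw)
    req_id, method, params = req.get("id"), req.get("method"), req.get("params", {})
    if method == "tools/list":
        tools = [{"name": n, "description": t["description"], "inputSchema": t["inputSchema"]}
                 for n, t in TOOLS.items()]
        return _reply(req_id, result={"tools": tools})
    if method == "tools/call":
        entry = TOOLS.get(params.get("name"))
        if entry is None:
            return _reply(req_id, error={"code": -32602, "message": "Unknown tool"})
        text = entry["fn"](**params.get("arguments", {}))
        return _reply(req_id, result={"content": [{"type": "text", "text": text}],
                                      "isError": False})
    return _reply(req_id, error={"code": -32601, "message": "Method not found"})
```

Note that the arguments go straight into the function here. In a real server, validate them against `inputSchema` first, because every tool you expose is a new path into your internal APIs.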

Step 4 — Make every step observable

Log everything the agent does, including prompts, model responses, and tool calls with their arguments and results.

Pipe these traces to your SIEM so you can start understanding what normal agent behavior looks like and spot unusual agent-driven flows.
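A minimal sketch of what "log every step" can look like, using Python's standard `logging` module to emit one JSON object per line. The field names (`trace_id`, `event`, `details`) are assumptions; map them to whatever schema your SIEM expects, and point the handler at your real log pipeline instead of stdout.

```python
import json
import logging
import sys
import time
import uuid
from typing import Any

# One JSON object per line is easy for most SIEMs to ingest.
logger = logging.getLogger("agent.trace")
logger.setLevel(logging.INFO)
_handler = logging.StreamHandler(sys.stdout)  # swap for SysLogHandler or your log shipper
_handler.setFormatter(logging.Formatter("%(message)s"))
logger.addHandler(_handler)


def log_step(trace_id: str, agent: str, event: str, **details: Any) -> str:
    """Emit one structured trace event and return the JSON line that was logged."""
    record = {
        "ts": time.time(),
        "trace_id": trace_id,  # ties every step of one agent run together
        "agent": agent,
        "event": event,        # e.g. "prompt", "tool_call", "tool_result"
        "details": details,
    }
    line = json.dumps(record, default=str)
    logger.info(line)
    return line


run_id = str(uuid.uuid4())
log_step(run_id, "vuln-summarizer", "prompt", text="Summarize this week's scan results")
log_step(run_id, "vuln-summarizer", "tool_call", tool="get_open_findings",
         arguments={"severity": "critical"})
```

Prompts and tool arguments can contain secrets or sensitive data, so decide up front what to redact before these lines leave the host.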

Step 5 — Treat memory like a database (because it is)

Agents need memory for context, and that memory is also an attack surface: a single poisoned entry can quietly steer the agent's future actions.

Set retention/TTL, classify/encrypt, and track who/what wrote to memory.

The recent OWASP “Securing Agentic Applications Guide” is a great resource that covers this.
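Here is a small in-memory sketch of those controls: a TTL on every entry, a classification label checked on read, and an audit trail of who wrote what. The labels and class names are hypothetical, and encryption is deliberately left out because it belongs in whatever real datastore backs your agent's memory.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

# Classification labels and their ordering are assumptions for this sketch.
LEVELS = {"public": 0, "internal": 1, "confidential": 2}


@dataclass
class MemoryEntry:
    value: str
    classification: str
    written_by: str   # which agent, tool, or user wrote the entry
    expires_at: float


class AgentMemory:
    """In-process memory with TTL, classification labels, and write provenance.
    Encryption at rest is out of scope; it belongs in the real backing store."""

    def __init__(self, default_ttl_s: float = 3600.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._entries: Dict[str, MemoryEntry] = {}
        self._default_ttl = default_ttl_s
        self._clock = clock
        self.audit_log: List[Tuple[float, str, str]] = []  # (time, writer, key)

    def write(self, key: str, value: str, *, classification: str,
              written_by: str, ttl_s: Optional[float] = None) -> None:
        if classification not in LEVELS:
            raise ValueError(f"unknown classification: {classification}")
        now = self._clock()
        ttl = self._default_ttl if ttl_s is None else ttl_s
        self._entries[key] = MemoryEntry(value, classification, written_by, now + ttl)
        self.audit_log.append((now, written_by, key))  # who/what wrote, and when

    def read(self, key: str, *, clearance: str) -> Optional[str]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        if self._clock() >= entry.expires_at:
            del self._entries[key]  # expired: purge instead of serving stale context
            return None
        if LEVELS[entry.classification] > LEVELS[clearance]:
            return None  # caller isn't cleared for this label
        return entry.value
```

Injecting the clock makes the TTL behavior easy to test without sleeping.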

Step 6 — Have an Agent IR playbook

How exactly do you stop/kill a rogue or compromised agent?

Create a rough playbook for revoking the agent's credentials and terminating its sessions when you need to stop it.

Start small and refine it with what you learn over time.
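A rough playbook can start as a single function. The sketch below is hypothetical: `AgentControlPlane` stands in for your identity provider, secrets manager, and agent platform, which is where revocation and session termination would actually happen. The point is the order of operations and the timeline it leaves behind for the post-incident review.

```python
import time
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class AgentControlPlane:
    """Hypothetical registry of each agent's live credentials and sessions.
    In production these would live in your IdP, secrets manager, and agent platform."""
    credentials: Dict[str, Set[str]] = field(default_factory=dict)  # agent -> active tokens
    sessions: Dict[str, Set[str]] = field(default_factory=dict)     # agent -> live session ids
    disabled: Set[str] = field(default_factory=set)
    timeline: List[str] = field(default_factory=list)               # evidence for the review

    def kill(self, agent_id: str, reason: str) -> None:
        """Playbook containment steps, in order."""
        self.disabled.add(agent_id)                      # 1. block new tool calls at the gateway
        revoked = self.credentials.pop(agent_id, set())  # 2. revoke every credential
        ended = self.sessions.pop(agent_id, set())       # 3. terminate live sessions
        stamp = time.strftime("%Y-%m-%dT%H:%M:%SZ", time.gmtime())
        self.timeline.append(f"{stamp} killed {agent_id} ({reason}): "
                             f"revoked {len(revoked)} credential(s), "
                             f"ended {len(ended)} session(s)")

    def is_allowed(self, agent_id: str, token: str) -> bool:
        """Gateway check to run before every tool call."""
        return agent_id not in self.disabled and token in self.credentials.get(agent_id, set())
```

Disabling the agent at the gateway comes first so that nothing new starts while credentials and sessions are being torn down.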

Where the Industry Is Headed

2025 is when the Agentic AI genie is truly out of the bottle.

MCP and platforms like Amazon Bedrock AgentCore will make Agentic AI mainstream, with cybersecurity playing a significant role in these conversations.

There is no AI apocalypse coming, but there is going to be massive disruption and high demand for security professionals who understand agentic risks.

Teams that implement identity, observability, tool governance, and memory security now will ship useful agents faster — and sleep better when they do.

I hope you are ready for the crazy new world ahead!