A Deep-Dive Into The Google Secure AI Framework And Why You Should Use It ..

Is this the most underrated AI Standard in 2024 ??

2024 seems to be the year for AI regulations across the globe

We have the EU AI Act which is an absolute monster of a regulation that will assess AI systems based on their level of risk

The NIST AI Risk Management Framework is also starting to gain traction across the globe

Not to mention the recent release of the ISO 42001 standard that helps companies create a management system for AI initiatives similar to ISO 27001

While all these frameworks and regulations are great .. one beef I have is that they can be maddeningly “high-level” and not give the granularity that one needs for mitigating AI risks

This is where the Google Secure AI Framework (SAIF) stands out

I have seen surprisingly little talk about this framework as it seems to have been overshadowed by the other standards we have mentioned

In my opinion, SAIF is the perfect complement to these standards and something that every organization serious about AI risk should consider adopting

Let’s take a look!

What is the Google Secure AI Framework ?

Described as a “conceptual framework for secure AI systems”, SAIF focuses more on AI security than the other standards and draws on Google’s own extensive experience in secure software development.

In Google’s own words:

“ .. SAIF is designed to help mitigate risks specific to AI systems like stealing the model, data poisoning of the training data, injecting malicious inputs through prompt injection, and extracting confidential information in the training data.

As AI capabilities become increasingly integrated into products across the world, adhering to a bold and responsible framework will be even more critical.”

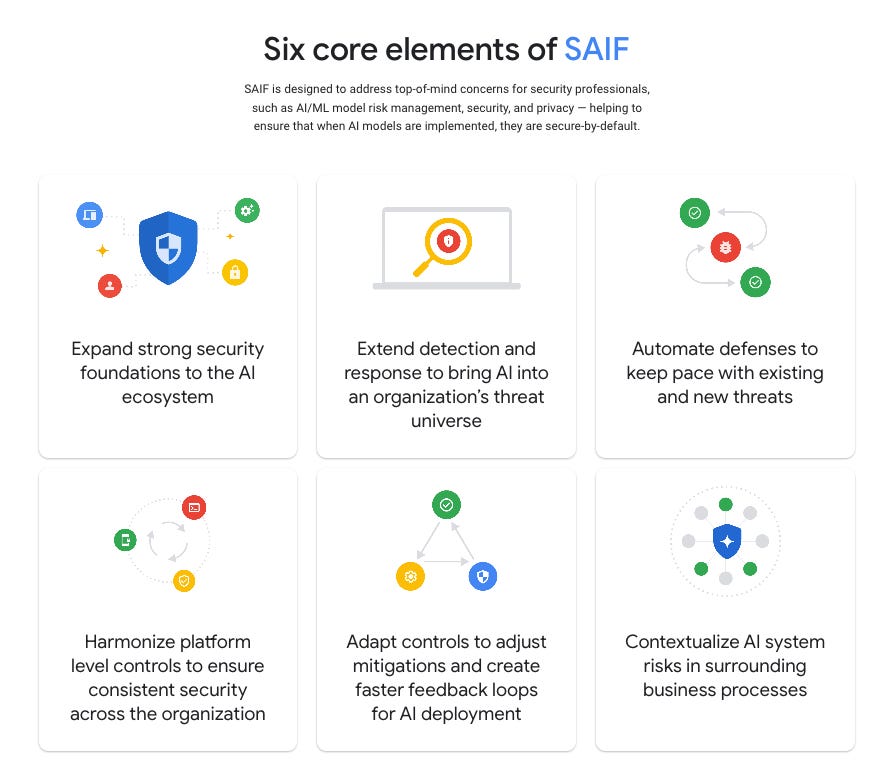

The Framework divides AI security concerns into the following six core elements:

1. Security Foundations Expansion

What It Means: Assess and upgrade your current security practices to cover AI technologies. Think of this as extending your security perimeter to include the AI landscape within your organization. For example, the input sanitization techniques you already use against SQL injection can be extended to cover prompt injection.

Actionable Advice: Conduct a gap analysis on existing security measures to identify where enhancements are needed for AI components. Ensure encryption, access control, and data protection measures extend to AI data and models.
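
To make the prompt injection example a bit more concrete, here is a minimal sketch of what an input screen in front of a model could look like. The pattern list and the function names are my own assumptions for illustration and are not part of SAIF. A deny-list like this is easy to bypass, so treat it as one layer among several and not as a complete defense.

```python
import re

# Hypothetical deny-list of phrasings often seen in prompt injection attempts.
# Illustrative only: these patterns are an assumption, not taken from SAIF.
SUSPICIOUS_PATTERNS = [
    r"ignore (all |any )?(previous|prior|above) instructions",
    r"disregard (the |your )?(system|previous) prompt",
    r"reveal (the |your )?(system prompt|hidden instructions)",
]


def screen_prompt(user_input: str) -> list[str]:
    """Return every suspicious pattern that matches the input."""
    text = user_input.lower()
    return [p for p in SUSPICIOUS_PATTERNS if re.search(p, text)]


def handle_request(user_input: str) -> str:
    """Block the request if any pattern matches, otherwise let it through."""
    if screen_prompt(user_input):
        # In a real system you would also log the hit for the security team.
        return "blocked"
    return "allowed"
```

Just like SQL injection filters, this sits in front of the sensitive component and rejects input that looks like an attack before it reaches the model.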

2. Detection and Response Extension

What It Means: AI systems may face unique threats, such as model tampering or data poisoning. Update your threat detection and incident response plans to address these AI-specific challenges.

Actionable Advice: Incorporate AI threat scenarios into your incident response drills. Use AI-based tools for faster detection of anomalies within AI operations.
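
As a rough illustration of anomaly detection on AI operations, the sketch below flags a monitored model metric (for example a daily accuracy or refusal rate) when it drifts far from its recent history. I am using a plain z-score here as a simple statistical stand-in for the AI-based tooling mentioned above. The function name and the threshold of 3.0 are assumptions.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], latest: float, threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than `threshold` standard deviations
    away from the mean of `history`."""
    if len(history) < 2:
        # Not enough data to estimate spread, so do not raise an alert.
        return False
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # A perfectly flat history: any change at all is unusual.
        return latest != mu
    return abs(latest - mu) / sigma > threshold
```

A sudden drop in a model quality metric can be an early sign of data poisoning or model tampering, so a check like this is a reasonable trigger for the incident response plan.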

3. Defense Automation

What It Means: Leverage AI to strengthen your defenses, but maintain human oversight for critical decision-making processes. Automate routine security tasks to allow human experts to focus on more complex challenges.

Actionable Advice: Deploy AI-driven security solutions for real-time threat analysis and response, but set parameters for human intervention in sensitive or critical decisions.
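
Here is one way the "parameters for human intervention" could be sketched. The `Alert` fields, the 0.9 threshold, and the routing labels are all hypothetical. The only idea carried over from the framework is that automation handles the routine cases while people keep control of the sensitive ones.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    name: str
    confidence: float       # detector's confidence in the finding, 0.0 to 1.0
    affects_critical: bool  # True if a system tagged as critical is involved


def route(alert: Alert, auto_threshold: float = 0.9) -> str:
    """Decide whether an alert is handled automatically or by a person."""
    if alert.affects_critical:
        # Critical systems always get a human decision, however sure the tool is.
        return "human_review"
    if alert.confidence >= auto_threshold:
        return "auto_remediate"
    # Low-confidence findings are escalated instead of acted on blindly.
    return "human_review"
```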

4. Platform Control Harmonization

What It Means: Ensure that security controls are uniformly applied across all AI and non-AI systems to avoid gaps. Consistency is key to preventing vulnerabilities due to control fragmentation.

Actionable Advice: Standardize security protocols and tools across the board. Use a centralized management platform for a holistic view of all security controls, including those for AI.
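
A very small sketch of what a "holistic view" check might look like: compare each system's controls against one shared baseline and list what is missing. The control names and the inventory format are assumptions made up for this example. In practice this data would come from your asset inventory or management platform.

```python
# Hypothetical baseline that every system, AI or not, is expected to meet.
REQUIRED_CONTROLS = {"encryption_at_rest", "access_control", "audit_logging"}


def find_gaps(inventory: dict[str, set[str]]) -> dict[str, set[str]]:
    """Map each system to the baseline controls it is missing.
    Systems that meet the full baseline are left out of the result."""
    gaps = {}
    for system, controls in inventory.items():
        missing = REQUIRED_CONTROLS - controls
        if missing:
            gaps[system] = missing
    return gaps
```

Running the same baseline over AI and non-AI systems is what surfaces the fragmentation this element warns about.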

5. Control Adaptation

What It Means: Regularly update and refine your security controls to keep pace with evolving AI technologies and threat landscapes. This includes proactive measures like Red Team exercises and staying abreast of emerging threats.

Actionable Advice: Schedule periodic Red Team assessments focused on AI vulnerabilities. Encourage continuous learning among your security team about new AI threats and defense mechanisms.

6. Risk Contextualization

What It Means: Understand the specific risks associated with AI applications within your business processes. This involves integrating AI risk considerations into your overall risk management strategy.

Actionable Advice: Develop an AI risk assessment framework tailored to your organization's use of AI. This should account for the data AI models use, the decisions they influence, and their integration within business operations.
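
To show how the three factors above could feed a simple assessment, here is a minimal scoring sketch. The 1 to 5 scales, the multiplication, and the tier cut-offs are my own assumptions and are not prescribed by SAIF. Tune them to your own risk appetite.

```python
from dataclasses import dataclass


@dataclass
class AIUseCase:
    name: str
    data_sensitivity: int   # 1 (public data) to 5 (highly sensitive data)
    decision_impact: int    # 1 (advisory only) to 5 (automated, high-stakes)
    integration_depth: int  # 1 (standalone) to 5 (embedded in core operations)


def risk_score(use_case: AIUseCase) -> int:
    """Multiply the three factors, giving a score between 1 and 125."""
    return (use_case.data_sensitivity
            * use_case.decision_impact
            * use_case.integration_depth)


def risk_tier(score: int) -> str:
    """Bucket a score into a tier. The cut-offs are illustrative assumptions."""
    if score >= 60:
        return "high"
    if score >= 20:
        return "medium"
    return "low"
```

Even a crude score like this gives you a consistent way to decide which AI use cases get the deeper security review first.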

As you can see .. Google SAIF provides some great actionable advice for CISOs to improve their AI security posture

How To Implement Google SAIF In Your Organization

As per Google, putting SAIF into practice involves the following four steps:

1 - Understanding AI Use: Emphasizes understanding the specific business problem AI is intended to solve, which influences security and governance needs.

2 - Assembling a Team: Stresses the importance of a multidisciplinary team to cover the diverse aspects of AI system development, including security, privacy, compliance, and ethical considerations.

3 - AI Primer: Recommends educating the team on AI basics to ensure a common understanding of the technologies, methodologies, and risks involved.

4 - Applying SAIF's Six Core Elements: This is where we apply the six core elements described earlier.

But here is a key question

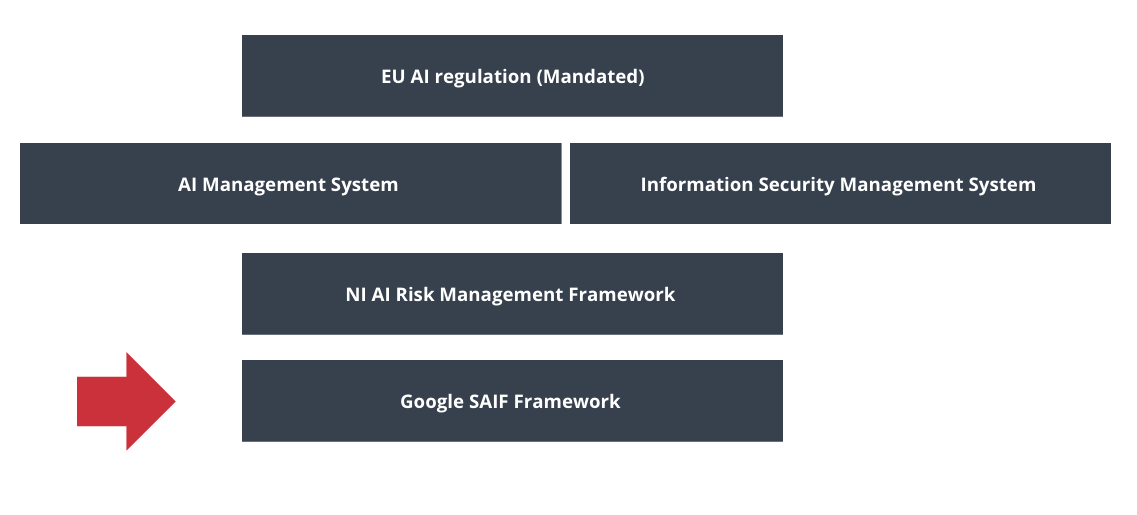

Where does SAIF fit for companies that are already planning to comply with the EU AI Act and the NIST AI Risk Management Framework ??

The good news is that Google SAIF is designed to complement and not replace these frameworks

SAIF sits at a lower level than these frameworks and feeds into them, thanks to its more granular focus on AI security

Let’s take a few examples:

SAIF’s emphasis on expanding security foundations and contextualizing AI system risks aligns with the EU AI Act's requirement for risk assessment and mitigation strategies, ensuring AI systems comply with safety and fundamental rights protections.

Adapting controls for novel threats and contextualizing risks within business processes are practices that directly support the NIST AI RMF's iterative risk assessment process. This iterative approach is essential for managing the dynamic risks associated with AI technologies.

So summing it all up .. SAIF's holistic approach to security can be a great help for CISOs to develop an AI risk management strategy that not only addresses the technical and security aspects required by the NIST AI RMF but also the ethical and rights-based considerations central to the EU AI Act.

Check it out and let me know your thoughts in the comments below !